AMD vs Nvidia at CES 2026: Two different AI chip moves

AMD and Nvidia used CES 2026 to redraw the AI-chip battlefield. While AMD is pushing AI everywhere, from PCs to the embedded edge, Nvidia is doubling down on full-stack AI supercomputers for hyperscalers.

Nvidia (NVDA) is trading near the top of its 52‑week range in the high‑$180s to low‑$190s, after a 2025 run powered by data-centre GPU demand and hyperscaler AI capex. AMD (AMD) has logged ~70% 1‑year gains, but still trades at a discount to NVDA on price‑to‑sales despite investors increasingly treating it as “AI beta with catch‑up potential.”

AMD: “AI everywhere” from PC to accelerator

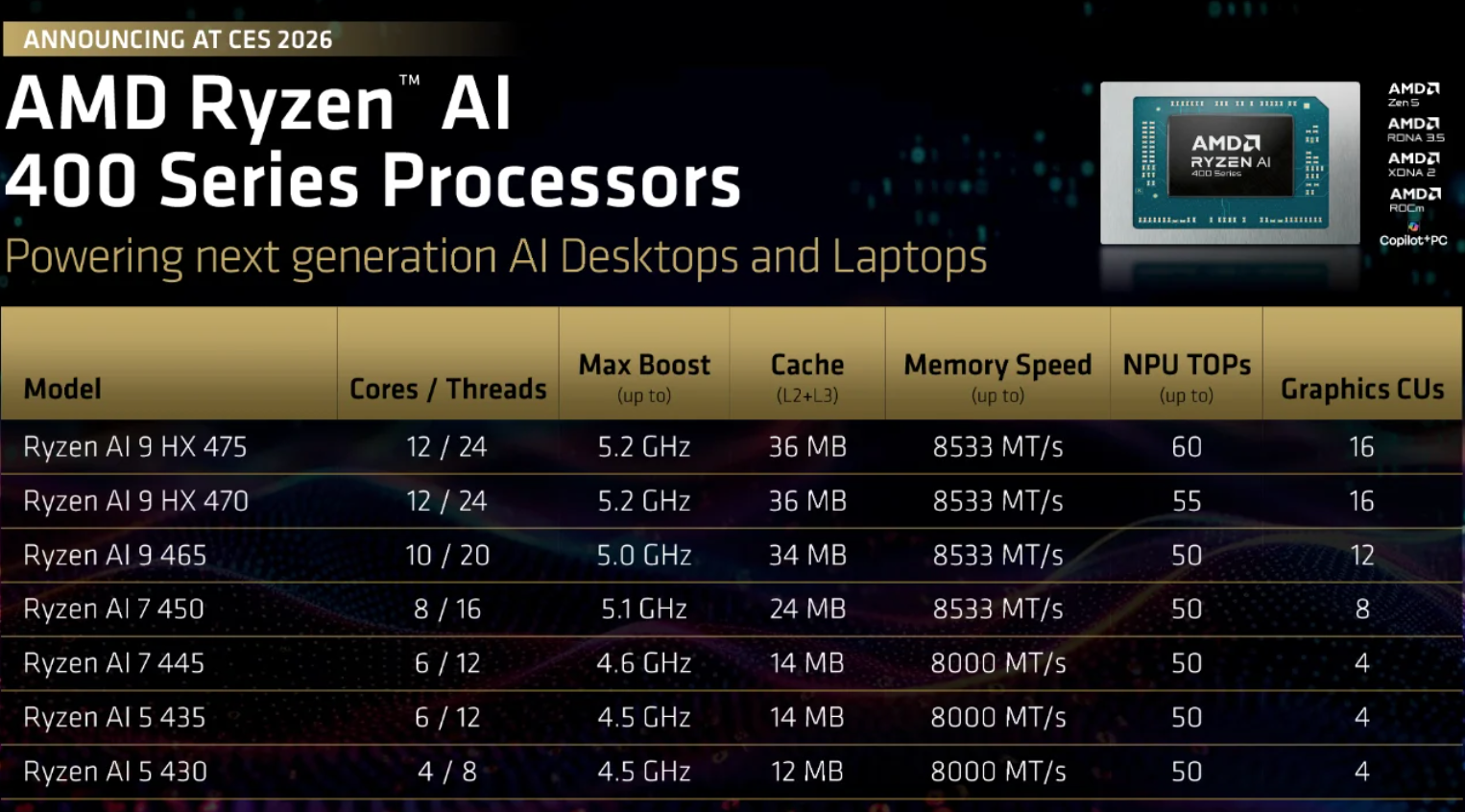

At CES, AMD expanded its Ryzen AI portfolio with new Ryzen AI 400 / AI Max+ laptop chips, as well as a fresh Ryzen AI Embedded line built on Zen 5, targeting automotive, industrial, and “physical AI” deployments. Management is explicitly pitching the PC install base as a distributed AI edge, with OEM designs expected to ramp through 2026.

On the data-centre side, AMD is extending its MI300/MI455 accelerator roadmap, positioning these GPUs as lower-cost, more open alternatives to Nvidia for training and inference at scale, with coverage flagging OpenAI-type customers as realistic adopters. For trading desks, AMD screens as a classic “share‑gain story”: smaller installed base, but significant operating leverage if ROCm, MI‑series wins, and Ryzen AI attach rates come through.

Nvidia: doubling down on AI supercomputers

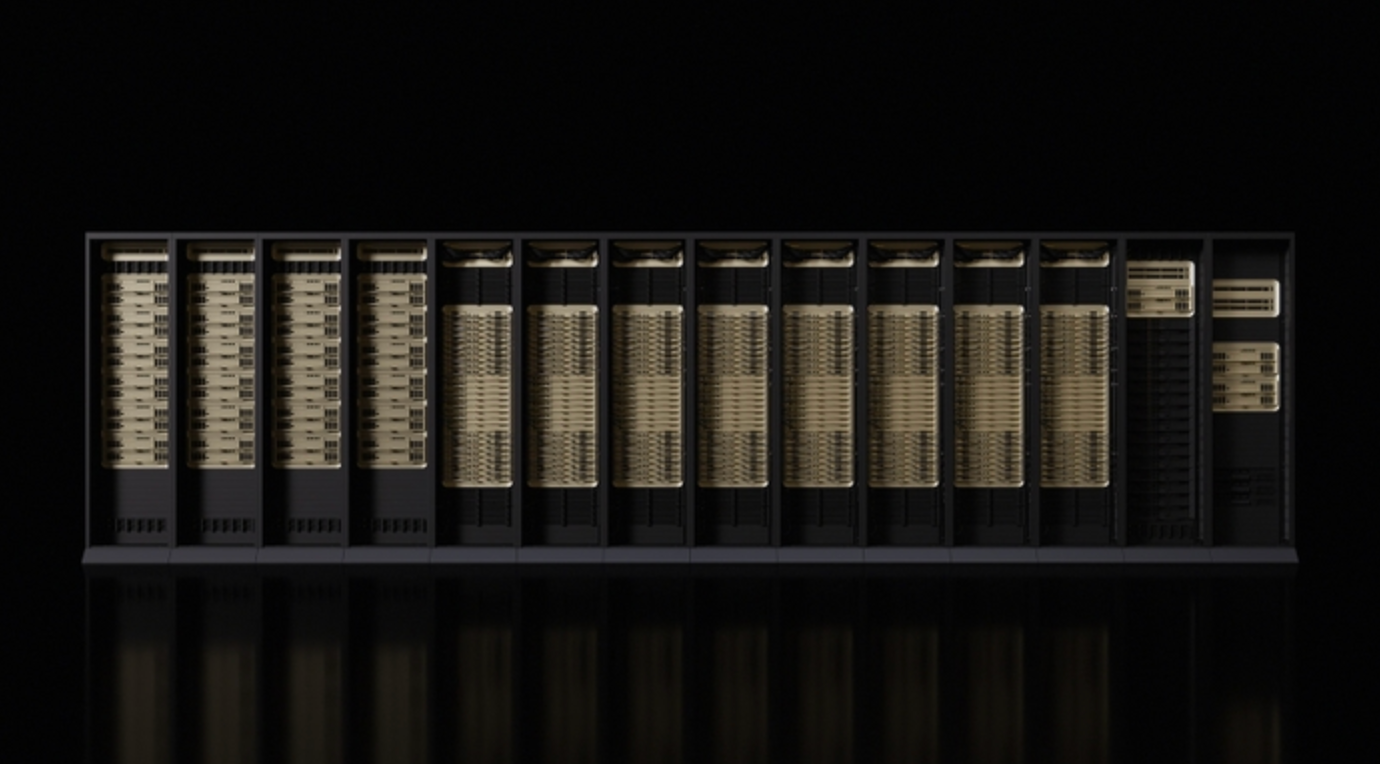

Nvidia answered with the Rubin platform - six new chips, including Rubin GPUs, Vera CPUs and updated NVLink 6 / Spectrum‑X networking, sold as a turnkey AI supercomputer stack.

Rubin is explicitly aimed at “AI factories” for advanced models and agentic workloads, with first systems due in the second half of 2026.

Crucially, Rubin is being rolled out with all four major hyperscalers (AWS, Azure, Google Cloud, Oracle Cloud) and specialist GPU clouds, reinforcing Nvidia’s role at the centre of AI infrastructure spend. From a trading perspective, NVDA remains the de facto AI index: richly valued, but underpinned by multi‑year cloud capex. Any visible shift toward custom ASICs or slower AI budgets is the key risk to the current multiple.

Why it matters

CES 2026 underlined that the AI trade is entering a more demanding phase. The easy narrative - “AI equals GPUs equals upside” - is fading. What matters now is where AI workloads actually land, how durable capital spending proves to be, and which vendors retain pricing power as inference, efficiency and deployment take centre stage.

Nvidia’s strategy reinforces its position at the core of hyperscaler AI budgets, but that concentration cuts both ways. As training matures and inference scales, margins are likely to compress and competition - from AMD, custom silicon, and cloud-native alternatives - will intensify. Execution risk is rising while valuations remain elevated.

AMD, by contrast, is leaning into breadth rather than dominance. Its “AI everywhere” approach positions it to benefit if AI adoption spreads beyond mega-scale data centres into PCs, industrial systems and embedded use cases. For markets, that makes AMD less about outright leadership and more about incremental share capture across a widening AI surface area.

In short, CES confirmed that AI is no longer a single-trade story. The next leg will be shaped by deployment economics, not just compute ambition.

Strategic read‑through for the AI‑chips trade

CES 2026 confirms that neither vendor is selling bare chips anymore; both are shipping platforms - silicon, plus interconnect, plus software ecosystems (CUDA vs. ROCm) and reference systems.

For investors, the core questions are now: who wins incremental hyperscaler workloads, how much pricing power survives as AMD, custom silicon and regulatory pressure ramp, and how durable AI capex is through the next macro slowdown.

Within that framework, Nvidia remains the high-conviction core AI infrastructure exposure, while AMD offers higher-beta upside if its “AI everywhere” strategy delivers real share gains in accelerators and PC/edge AI over the next 12–24 months.

Key takeaway

CES 2026 highlighted a clear strategic divergence. According to analysts, Nvidia is a high-conviction, system-level bet on hyperscaler AI infrastructure, but with rising sensitivity to inference economics, pricing pressure and macro conditions. AMD offers higher-beta upside through its push to embed AI across PCs, edge devices and alternative accelerator stacks - a riskier path, but one with meaningful leverage if adoption broadens over the next 12–24 months.

For investors and traders, the AI-chip trade is evolving from a momentum story into a selectivity trade, where platform stickiness, cost efficiency and workload mix matter as much as raw performance.

AMD and Nvidia technical outlook

AMD is stabilising after a volatile pullback from the $260 highs, with price consolidating around the $223 area as buyers cautiously step back in. While the broader structure remains range-bound, momentum is improving: the RSI is rising smoothly above the midline, signalling a gradual rebuild in bullish conviction rather than a sharp risk-on surge.

From a structural perspective, the $187 support remains a key downside level, with a break below likely to trigger liquidation-driven selling, while the deeper $155 zone marks longer-term trend support.

On the upside, the $260 resistance continues to cap the recovery, meaning AMD will need sustained buying pressure to confirm a renewed trend higher. For now, price action suggests consolidation with a mild bullish bias, rather than a decisive breakout.

Nvidia is attempting to stabilise after its recent pullback, with price reclaiming the $189 area and moving back toward the middle of its broader range. The rebound from the $170 support zone has improved short-term structure, while momentum is beginning to turn constructive: the RSI is rising sharply just above the midline, signalling strengthening buying interest rather than a purely technical bounce.

That said, upside progress remains capped by resistance at $196 and the key $208 level, where previous rallies have triggered profit-taking. As long as NVDA holds above $170, the broader structure remains intact, but a sustained break above $196 would be needed to confirm a more durable bullish continuation.
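Both momentum readings above rest on the RSI and its midline of 50. For readers who want to see how such a reading is derived, here is a minimal Python sketch of the indicator using Wilder's smoothing. The 14-period default is the common convention, not a setting stated in this article, and the function name `rsi` and the sample prices are purely illustrative rather than actual AMD or NVDA closes.

```python
def rsi(closes, period=14):
    """Return the latest RSI value for a list of closing prices.

    Uses Wilder's smoothing. The 14-period default is an assumed
    convention, not a parameter taken from the article.
    """
    if len(closes) < period + 1:
        raise ValueError("need at least period + 1 closing prices")

    # Split day-over-day changes into separate gain and loss series.
    deltas = [b - a for a, b in zip(closes, closes[1:])]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]

    # Seed the averages with a simple mean over the first `period` changes.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period

    # Wilder's smoothing over the remaining changes.
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period

    if avg_gain == 0 and avg_loss == 0:
        return 50.0  # flat prices: treated as neutral here (a convention choice)
    if avg_loss == 0:
        return 100.0  # only gains in the window

    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


# Hypothetical closes, for illustration only.
sample_closes = [10.0, 11.0, 10.0]
print(rsi(sample_closes, period=2))  # equal average gain and loss -> 50.0
```

A result above 50 means average gains have outweighed average losses over the lookback window, which is the sense in which an RSI "above the midline" is read as improving momentum.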

The performance figures quoted are not a guarantee of future performance.